As modern networks grow in scale and complexity, artificial intelligence (AI) is becoming an essential tool for managing, optimizing, and evolving infrastructure. According to recent findings from our collaborative report with Linux Foundation Research, “The 2025 Open Source in Networking Study,” organizations are increasingly turning to AI applications to meet the demands of cloud-native environments—and open source is at the heart of this transformation.

Open Source Leads the Way

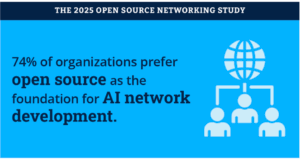

An overwhelming 75% of organizations reported building their networking-focused AI applications on open source foundations. This approach offers unmatched flexibility, cost-efficiency, and speed. By tapping into the vast ecosystem of community-driven tools and frameworks, organizations can tailor solutions to their specific needs while staying agile in a rapidly evolving landscape.

Open source isn’t just a cost-saving tactic—it’s a strategic enabler. It fosters faster innovation cycles, encourages cross-industry collaboration, and reduces the risk of vendor lock-in. These benefits are especially crucial in a domain where adaptability and interoperability are key.

Diverse Paths to AI in Networking

While open source is the preferred starting point, the report highlights a spectrum of strategies. About 42% of organizations choose to develop AI solutions entirely in-house. This path offers complete control over architecture and functionality, which is especially important in highly regulated industries or for specialized use cases. Though resource-intensive, it yields custom-built solutions that are tightly aligned with the organization's own requirements.

Meanwhile, 36% of respondents opt to purchase AI applications from vendors. This route is particularly common among organizations in the midst of cloud-native transitions. For these teams, vendor solutions offer rapid deployment and immediate functionality, bridging capability gaps while internal infrastructure matures.

Each approach represents a balance of priorities—control, cost, speed, and capability—depending on an organization’s maturity and strategic goals.

Foundations for Open Networking AI Innovation

The report also underscores the critical components needed to accelerate AI development in open networking: high-quality data and robust development frameworks.

A full 56% of respondents identified high-quality, domain-specific data as the most important foundational element. Effective AI models are only as good as the data they learn from. In the networking domain, rich, reliable data sets enable AI to accurately detect faults, predict failures, and optimize performance in real-world scenarios.

Another 34% of respondents ranked development frameworks as top enablers. These tools simplify the process of building, testing, and deploying models, accelerating innovation and reducing barriers for developers. The recently announced LFN Essedum project aims to address exactly those needs. It provides an AI application and agent development framework tailored to the networking domain, with tools for accelerating integrations with data sources and models, running MLOps, and governing AI so that outcomes are safe and efficient. Salus, another LF project, provides a framework for building trustworthy AI applications. This responsible AI project helps apply guardrails to any AI application or agent.

The Path Forward

The convergence of AI and open networking represents a transformative opportunity. With the right tools, data, and collaborative spirit, the industry can create intelligent, automated networks that are agile, scalable, and future-ready.

LF Networking remains committed to advancing this vision through open collaboration and community-driven innovation. As AI continues to reshape the networking landscape, open source will be the cornerstone of scalable, sustainable progress.