’Tis the season for 2026 forecasts, and for looking back at what 2025 brought us, including our annual “report card” on last year’s predictions. In 2025, LFN drove the next phase of industry evolution by enabling intelligent, automated, and sustainable networks built on open innovation. Read on for a look at where we’re headed next, and how our 2025 predictions stacked up.

1. AI-Native Networks Cross the Chasm

- Autonomous networks are on track to cross the 50% mark across core, edge, and RAN.

- AI-Driven Automation Matures: The application of AIOps will move beyond initial trials, delivering tangible benefits for network service providers and hyperscalers.

- AI-Native RAN Innovation: The Radio Access Network (RAN) will see significant AI innovation, primarily fueled by the availability of comprehensive end-to-end open source implementations (from projects like OCUDU, Duranta, and OSC), democratizing the development of cutting-edge solutions.

- Networks shift from “AI-enabled” to AI-native: planning, optimization, remediation, and energy management run autonomously.

- AIOps moves from trials to production; Level 4–5 (L4–L5) autonomous network stacks start shipping.

- Operations milestone: ~40% of enterprises experience routine “zero-human” network days; AI agents run NOCs.

2. APIs & Agents Become the Revenue Engine

- APIs and agents turn decades of network complexity and technical debt into consumable, monetizable services, particularly in fraud prevention and digital identity.

- Specialized, collaborative AI agents become the standard AIOps pattern, with trust, security, and policy treated as first-class concerns. However, these agents will require AI frameworks (such as Essedum and Salus) to build on.

- Agentic AI will not have a major impact on network capacity demands: more content is generated, but the resulting network traffic will be mostly text-based (prompts and responses).

- AI frameworks take center stage over raw model hype.

3. Edge AI Goes On-Device and Data Stays at the Edge

- “Cloud → edge → device”: edge inference hits ~50% of enterprise AI workloads; OEMs standardize local AI.

- Breakthroughs in quantization usher in the first trillion-token-class model on a smartphone; “personal LLMs” become a category.

- New low-latency use cases land in healthcare, manufacturing, and automotive where cloud-only falls short.

- Sending data from the edge to the cloud for AI applications becomes too costly and too slow.

4. Sovereignty Shapes Architecture; In-Country AI Data Centers Boom, Creating Networking Software Opportunities

- Geopolitical and regulatory mandates (e.g., CRA/NIST and country-specific rules) drive in-country data & AI architectures.

- Nations fund massive domestic AI data centers, stretching datacenter fabrics and AI-optimized networking at scale.

- Open source networking (e.g., SONiC) remains foundational and widely adoptable.

5. Open Source Software Steals the Spotlight (Efficiency Era)

- After the 5G hardware investment cycle, attention swings to software efficiency: doing more with less on existing hardware, echoing the x86 and Linux revolution of the 80s and 90s.

- End-to-end open source RAN stacks (e.g., OCUDU, Duranta, OSC) democratize innovation and speed deployment.

- 2026 will be a quiet year for networking, the calm before the 6G storm.

- Excitement around LLMs and agents will give way to practical, domain-specific use cases.

Hear more on what’s in store for next year:

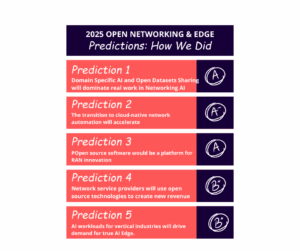

Grading 2025 Predictions: A Report Card

Prediction: Domain-specific AI and open dataset sharing will dominate real work in networking AI. Predictive AI and small language models (SLMs) will be applied to many aspects of designing and maintaining networks. The availability of data will become a key factor for success, and the open source community will have an opportunity to expand into data sharing and innovation platforms.

Grade: A

Operators moved from pilots to production AI in planning, optimization, and remediation, often with small language models (SLMs) tuned to network domains. Open datasets and shared telemetry emerged as force multipliers for quality and reuse.

- 92% of orgs rely on open source for networking, with AI-driven automation and cloud-native leading priorities.

- Heavy Reading/Omdia’s 2025 operator study reports deployments of agentic AI and data quality workstreams aimed at autonomy.

- Real progress toward autonomy: China Mobile reported Level-4 Autonomous Networks in NOC operations using intelligent agents and TM Forum assets.

Prediction: The transition to cloud-native network automation will accelerate. Open source projects will help create new and more efficient ways to automate deployment and operation of networks that are designed to leverage the benefits of the cloud-native ecosystem.

Grade: A-

GitOps, intent, and analytics pipelines on Kubernetes matured; operators scaled CNFs and CI/CD for networks while tying automation to energy and MTTR outcomes.

- LFN survey: cloud-native adoption and automation are central priorities; open source stacks are viewed as critical to agility and interoperability.

- An Amdocs Network Operator survey shows AIOps moving “from ambition to action,” with automation tied to measurable KPIs (incidents, MTTR).

Prediction: Open source software will be a platform for RAN innovation. The transition to the next generation will be more gradual this time, and open source radio access projects, especially for the CU/DU, will be a crucial enabler, lowering the bar for research and commercial product offerings.

Grade: A

Open RAN + open tooling lowered barriers for research and commercialization; AI-assisted RAN optimization broadened trials and selective rollouts.

- See the new Duranta project under LF Networking, as well as the Linux Foundation’s OCUDU project.

- Multiple analysts forecast strong Open RAN growth: e.g., $3.2–6.5B in 2025 with high-20s to high-30s CAGR through 2030.

- European/industry momentum plus vendor shifts underpin continued Open RAN transition and experimentation.

Prediction: Network service providers will use open source technologies to create new revenue streams. Easy-to-consume APIs will accelerate the adoption of new services. Projects like CAMARA will enable this new way of monetizing service provider assets. Edge infrastructure for AI will be another new type of offering, orchestrated and managed by open source technology.

Grade: B+

API coverage is now massive, and in 2025 the focus shifted from operator sign-ups to building channels and revenue plays. Momentum is clear, but proof of monetization is still emerging.

- GSMA Open Gateway, with CAMARA-based APIs, now covers ~65–80% of global connections; 73 operator groups / 285 networks committed by mid-2025; focus turning to monetization.

- Ericsson and 12 operators launched Aduna, a joint venture to commercialize network APIs, with developer reach through Vonage and Google Cloud.

- Early “AI Edge” traction: new telco-aligned edge platforms launched for on-prem inference (e.g., Cisco’s Unified Edge with a Tier-1 operator as design partner).

Prediction: AI workloads for vertical industries will drive demand for true AI Edge. Considering the cost and scarcity of resources required for AI training and inference, vertical industries like manufacturing will look to optimize where data is stored and workloads are executed. Constraints like data privacy, energy, and cost will dictate a device-edge-cloud continuum for running AI and data workloads. Edge and networking open source projects will be at the forefront of providing the necessary frameworks and connectivity solutions.

Grade: B+

Manufacturing, retail, and utilities are prioritizing on-site inference for latency, privacy, and cost—leading to a device-edge-cloud continuum powered by open frameworks.

- LF Edge’s Akraino releases added “Physical AI” blueprints and real-world edge AI use cases (evidence of open stacks enabling vertical deployments).

- IDC: $261B edge spend in 2025, with continued double-digit growth toward ~$378B by 2028—AI is the prime driver.

- Industry leaders anticipate more inference shifting to devices/edge, reinforcing the continuum architecture.